Posted by PNY Pro, May 03, 2019

As a PC enthusiast, I love pitting hardware solutions against each other to determine their relative performance when completing a particular task. This process is also known as “Benchmarking.” Benchmarking results are usually considered the best tool to evaluate the merits of competing systems when making a purchase decision.

In this 3-part blog series, we’ll discuss how to build a system, with an emphasis on benchmarking GPU performance for Deep Learning using Ubuntu 18.04, NVIDIA GPU Cloud (NGC) and TensorFlow.

The blog series will proceed as follows:

Part One: Introduction

Part Two: Hardware Consideration

Part Three: Software Setup

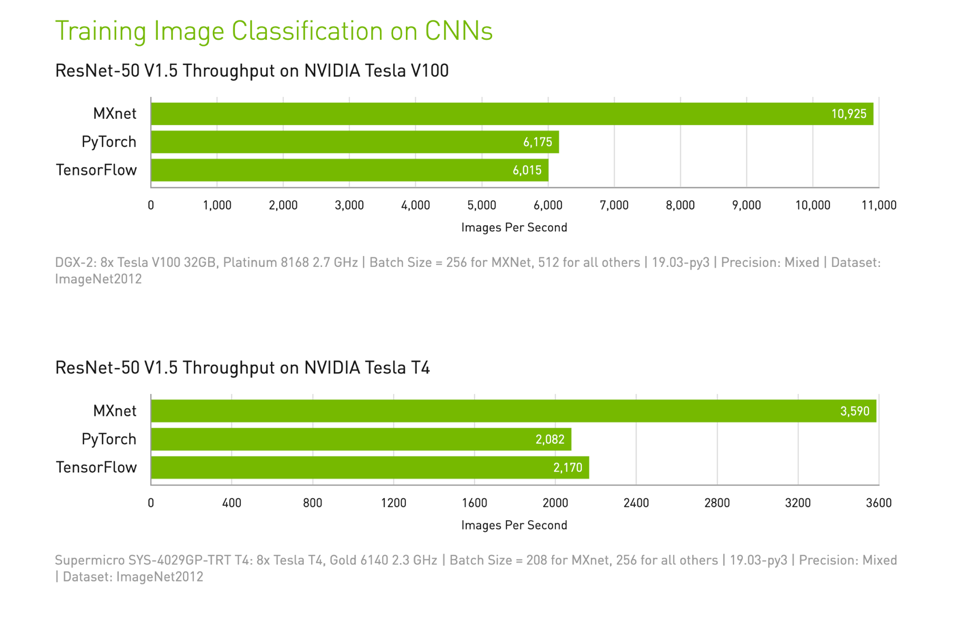

Most gamers are familiar with benchmarking tools such as 3DMark and Unigine Superposition, while professionals rely on trusted suites such as SPECviewperf and PassMark. When it comes to Deep Learning benchmarks, the best-known “yardstick” is training a ResNet-50 neural network on the ImageNet dataset, more specifically the ImageNet 2012 dataset for image classification.

Companies focused on Deep Learning often use this metric to measure and compare compute performance. This metric appeared in a recent NVIDIA blog that compared the performance of different Deep Learning frameworks. You can learn more about this at: https://developer.nvidia.com/deep-learning-performance-training-inference

Deep Learning is evolving rapidly, so this metric isn’t a full representation of Deep Learning performance. However, it is useful and relevant since it gives us a fair standard to compare “images per second” results.
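To make the “images per second” figure concrete, here is a minimal sketch of how such a throughput number can be derived from a timed training run. The function names and the sample numbers are illustrative assumptions, not values from any real benchmark, and the sketch assumes the batch size is specified per GPU.

```python
def images_per_second(batch_size, num_steps, elapsed_seconds, num_gpus=1):
    """Return training throughput in images per second.

    Assumes batch_size is the per-GPU batch size, so the number of
    images processed per step is batch_size * num_gpus.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    total_images = batch_size * num_gpus * num_steps
    return total_images / elapsed_seconds


def speedup(candidate_ips, baseline_ips):
    """Return how many times faster the candidate is than the baseline."""
    if baseline_ips <= 0:
        raise ValueError("baseline_ips must be positive")
    return candidate_ips / baseline_ips


if __name__ == "__main__":
    # Made-up timings for two hypothetical systems running the same workload.
    system_a = images_per_second(batch_size=64, num_steps=100, elapsed_seconds=20.0)
    system_b = images_per_second(batch_size=64, num_steps=100, elapsed_seconds=16.0)
    print(f"System A: {system_a:.1f} images/sec")
    print(f"System B: {system_b:.1f} images/sec")
    print(f"System B is {speedup(system_b, system_a):.2f}x as fast as System A")
```

Because both systems process the same number of images, the comparison stays fair only if the model, dataset, batch size and precision are also kept identical between runs.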

I should note that Dr. Kinghorn from PNY’s partner Puget Systems wrote about this subject a year ago; his blogs are a useful guide for beginners and offer helpful tips for advanced users as well. His efforts are highly recommended. Take the time to read his original 5-part blog.

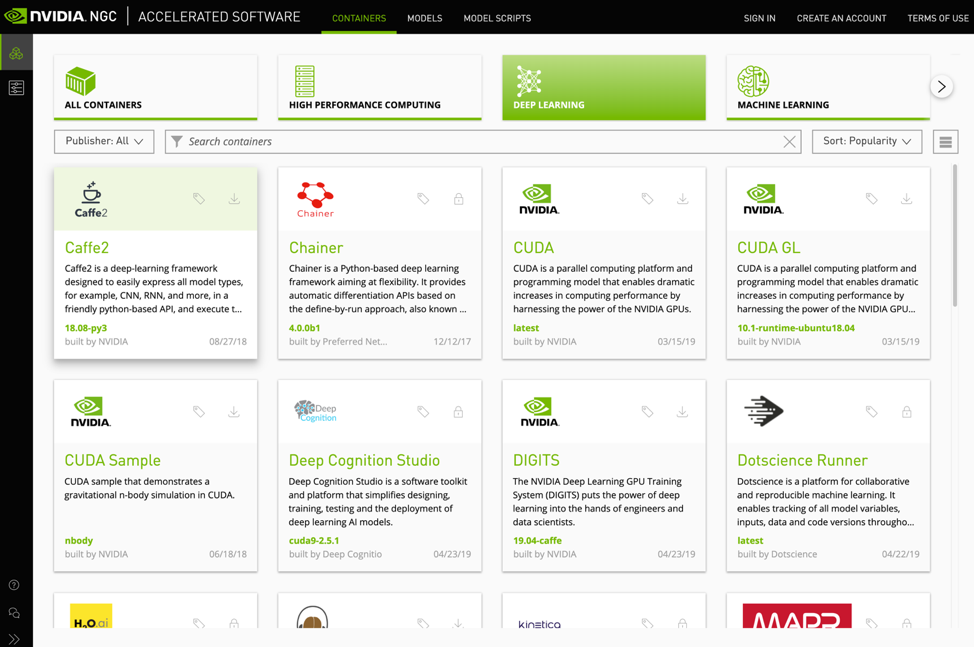

Introduction to NGC

The secret weapon for building this workflow benchmark is the NVIDIA GPU Cloud, or NGC.

NGC is a free Docker container registry, a collection of the top software for accelerated data science, machine learning, and analytics. Popular frameworks available on NGC include TensorFlow, PyTorch, Caffe2 and many others. You can browse the NGC registry by visiting this link: https://ngc.nvidia.com/catalog. No registration is required.

So, what is a container? According to Docker.com:

“A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.” https://www.docker.com/resources/what-container

Given this definition, a container is a controlled software environment in which all the software is designed to work well together. By using a Docker container, you ensure that your software environment is consistent between tests and free of dependency errors across its various libraries. NGC containers have the added advantage of being optimized, validated and GPU-accelerated by NVIDIA, so they will work well with NVIDIA GPUs.

Why must you use a Linux system? At present, NGC only works on Linux.

Before we dive into hardware considerations in the next blog, visit the NGC registry and get a sense of the diverse suite of containers available. Then visit PNY’s Artificial Intelligence landing page for more information about how NVIDIA GPUs are changing the world.